Cerebras delivers 4 exaFLOP AI supercomputer

This article is also available in French.


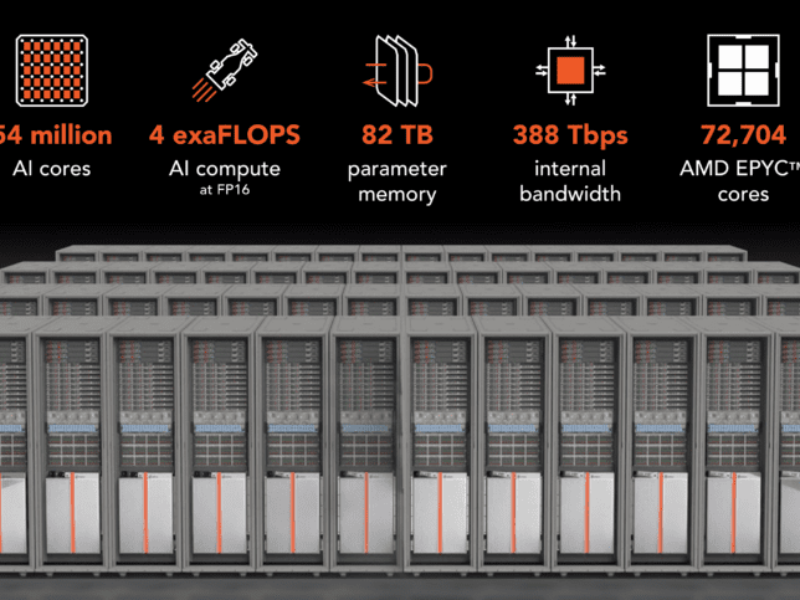

Cerebras has used its wafer-scale AI processor to deliver a 4 exaFLOP AI supercomputer in a deal worth $100m.

Condor Galaxy 1 (CG-1) is a 64-node AI supercomputer with 54 million cores and 82 Tbytes of memory that can deliver 4 exaFLOPS of AI compute. The roadmap extends performance to 36 exaFLOPS.
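As a quick back-of-envelope check on the 54 million figure, the core count can be split across the 64 nodes. This sketch assumes each node is one CS-2 system whose second-generation Wafer-Scale Engine (WSE-2) carries 850,000 cores; that per-wafer figure comes from Cerebras's published specifications, not from this article.

```python
# Back-of-envelope check of the CG-1 core count.
# Assumption: each of the 64 nodes is one CS-2 whose WSE-2 wafer has 850,000 cores.
CORES_PER_WSE2 = 850_000
NODES = 64

total_cores = NODES * CORES_PER_WSE2
print(f"{total_cores:,} cores, about {total_cores / 1e6:.0f} million")
```

The product, 54.4 million, rounds to the "54 million cores" quoted for the full 64-node system.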

The supercomputer was commissioned by G42, the leading AI and cloud company of the United Arab Emirates, and is the first in a series of nine supercomputers to be built and operated through a strategic partnership between Cerebras and G42. Upon completion in 2024, the nine interconnected supercomputers will deliver 36 exaFLOPS of AI compute, making the network one of the most powerful cloud AI supercomputers in the world.

CG-1 is located at the Colovore data centre in Santa Clara, California, and Cerebras is set to share the results of a new model trained on CG-1 at a conference next week.

The AI supercomputer is built on the Cerebras Wafer-Scale Cluster, a new system architecture that allows up to 192 Cerebras CS-2 systems to be connected and operated as a single logical accelerator. The design decouples memory from compute, allowing Cerebras to deploy terabytes of memory for AI models rather than the gigabytes possible with GPUs.

This is combined with weight streaming, a new way to train large models on wafer-scale clusters using only data parallelism. It exploits the large-scale compute and memory of the wafer-scale hardware and distributes work by streaming the model one layer at a time in a purely data-parallel fashion.
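The idea of streaming weights layer by layer while splitting only the data can be illustrated with a toy model. This is a hypothetical sketch, not Cerebras's implementation: each layer is a single scalar weight (h_i = w_i * h_{i-1}), the loss is mean squared error, and each "worker" is a Python list standing in for one CS-2. A central weight store sends one layer's weight at a time to every worker, each worker processes only its own slice of the batch, and per-layer gradients are summed across workers before the update.

```python
"""Toy sketch of layer-by-layer weight streaming with pure data parallelism.

Assumptions (illustrative only): every layer is one scalar weight, the loss is
mean squared error, and each worker is a list standing in for one CS-2 system.
"""
from typing import List

Shards = List[List[float]]


def forward_streamed(weights: List[float], shards: Shards) -> List[List[List[float]]]:
    """Send one layer's weight at a time; each worker keeps only its own activations."""
    # acts[k][i] = worker k's activations entering layer i (index 0 is the raw input)
    acts = [[list(shard)] for shard in shards]
    for w in weights:                    # the weight store streams layer by layer
        for worker_acts in acts:         # every worker applies the same weight to its shard
            worker_acts.append([w * h for h in worker_acts[-1]])
    return acts


def mse_loss(weights: List[float], shards: Shards, targets: Shards) -> float:
    """Mean of 0.5 * (y - t)^2 over every sample on every worker."""
    acts = forward_streamed(weights, shards)
    n_total = sum(len(s) for s in shards)
    return sum(0.5 * (y - t) ** 2
               for a, tg in zip(acts, targets)
               for y, t in zip(a[-1], tg)) / n_total


def train_step(weights: List[float], shards: Shards, targets: Shards, lr: float) -> List[float]:
    """Stream forward, stream backward in reverse layer order, reduce gradients, update."""
    n_total = sum(len(s) for s in shards)
    acts = forward_streamed(weights, shards)
    # Per-worker dLoss/dOutput for the MSE loss defined above
    grads = [[(y - t) / n_total for y, t in zip(a[-1], tg)]
             for a, tg in zip(acts, targets)]
    new_weights = list(weights)
    for i in reversed(range(len(weights))):
        w = weights[i]                   # layer i's weight is streamed out again
        partials = []
        for k, worker_acts in enumerate(acts):
            h_in = worker_acts[i]        # activations that entered layer i on worker k
            partials.append(sum(g * h for g, h in zip(grads[k], h_in)))
            grads[k] = [g * w for g in grads[k]]   # pass the gradient back to layer i-1
        # Gradients from all workers are summed before the single weight update
        new_weights[i] = w - lr * sum(partials)
    return new_weights


# Usage: the same batch split across two workers or kept on one gives the same update.
xs, ts = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
w0 = [0.5, 1.5, 0.8]
two_workers = train_step(w0, [xs[:2], xs[2:]], [ts[:2], ts[2:]], lr=0.01)
one_worker = train_step(w0, [xs], [ts], lr=0.01)
print(two_workers, one_worker)
print(mse_loss(w0, [xs], [ts]), mse_loss(two_workers, [xs], [ts]))
```

Because every worker sees identical weights and only the data is split, the two-worker and one-worker updates match up to floating-point rounding. That equivalence is what makes the scheme purely data parallel: adding workers changes how the batch is divided, not how the model is partitioned.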

These two technologies were used in November 2022 for the Andromeda AI supercomputer, which links 16 CS-2 systems and achieved 1 exaFLOP. Andromeda provided a reference design for Cerebras Wafer-Scale Clusters as well as a platform for training large generative models, enabling Cerebras to train seven open-source GPT large language models in just a few weeks. It became the flagship offering of the Cerebras Cloud.


The base configuration supports models of 600 billion parameters, extendable up to 100 trillion, with 386 terabits per second of internal cluster fabric bandwidth linking 72,704 AMD EPYC Gen 3 processor cores.
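To see why terabytes rather than gigabytes of memory matter at 600 billion or 100 trillion parameters, here is a rough sizing. It assumes 2 bytes per parameter for 16-bit weights alone and about 16 bytes per parameter for mixed-precision Adam training (16-bit weights and gradients plus 32-bit master weights and two 32-bit optimizer moments). These byte counts are generic assumptions, not figures from Cerebras, and activations are ignored.

```python
# Rough memory footprint for the parameter counts quoted for CG-1.
# Assumptions (not from the article): 2 bytes/param for 16-bit weights only,
# 16 bytes/param for mixed-precision Adam training state; activations ignored.
TB = 10**12  # decimal terabyte


def footprint_tb(params: float, bytes_per_param: float) -> float:
    """Memory in decimal terabytes for a given parameter count."""
    return params * bytes_per_param / TB


for label, params in [("600 billion", 600e9), ("100 trillion", 100e12)]:
    print(f"{label} params: {footprint_tb(params, 2):,.1f} TB of weights, "
          f"{footprint_tb(params, 16):,.1f} TB with Adam state")
```

Under these assumptions the base configuration needs about 1.2 TB for weights alone and roughly 9.6 TB with optimizer state, far more than the tens of gigabytes on a single GPU.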

Condor Galaxy will roll out in four phases over the coming year. CG-1 today consists of 32 CS-2 systems and is up and running in the Colovore data centre in Santa Clara.

Phase 2 will double the footprint of CG-1, expanding it to 64 CS-2 systems at 4 exaFLOPS. A 64-node system represents one full supercomputer instance. Phase 3 adds two more full instances elsewhere in the United States, bringing the total deployed compute to three centers at 12 exaFLOPS.

Phase 4 will add six more supercomputing centers, bringing the full installed base to nine instances at 36 exaFLOPS of AI compute. Cerebras says this puts it among the top three companies worldwide for public AI compute infrastructure.

| Condor Galaxy | Phase 1 (delivered) | Phase 2 (Q4 2023) | Phase 3 (H1 2024) | Phase 4 (H2 2024) |
|---|---|---|---|---|
| ExaFLOPS | 2 | 4 | 12 | 36 |
| CS-2 systems | 32 | 64 | 192 | 576 |
| Supercomputer centers | 1 | 1 | 3 | 9 |
| Milestone | Largest CS-2 deployment to date | First 64-node Cerebras AI supercomputer | First distributed supercomputer network | Largest distributed supercomputer network |
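The Condor Galaxy rollout figures are internally consistent: every phase works out to the same compute per CS-2 system, and every center from Phase 2 onward holds 64 systems. The short script below copies the figures from the table and derives only the ratios.

```python
# Consistency check on the Condor Galaxy rollout figures.
# Values are copied from the table; the ratios are derived here.
phases = {
    "Phase 1": {"exaflops": 2, "cs2": 32, "centers": 1},
    "Phase 2": {"exaflops": 4, "cs2": 64, "centers": 1},
    "Phase 3": {"exaflops": 12, "cs2": 192, "centers": 3},
    "Phase 4": {"exaflops": 36, "cs2": 576, "centers": 9},
}

for name, p in phases.items():
    pf_per_system = p["exaflops"] * 1000 / p["cs2"]   # 1 exaFLOPS = 1,000 petaFLOPS
    systems_per_center = p["cs2"] // p["centers"]
    print(f"{name}: {pf_per_system:.1f} PFLOPS per CS-2, {systems_per_center} CS-2 per center")
```

Each phase comes to 62.5 petaFLOPS of AI compute per CS-2. Phase 1 is half an instance (32 systems in one center), while later phases add full 64-system instances. Andromeda's 1 exaFLOP from 16 CS-2 systems fits the same per-system ratio.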

When fully deployed in 2024, Condor Galaxy will be one of the largest cloud AI supercomputers in the world. At 36 exaFLOPS, Cerebras says it will be nine times more powerful than Nvidia's Israel-1 supercomputer and four times more powerful than Google's largest announced TPU v4 pod.

Cerebras manages and operates CG-1 for G42 and makes it available through the Cerebras Cloud. Dedicated supercomputer instances for AI training are key to developing large models.


If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News
