The Future of AI Is Forged in Silicon: An Interview with Anastasiia Nosova

As AI workloads push the limits of computing, graphics processing units (GPUs) have taken center stage. In this interview, Elektor speaks with Anastasiia Nosova, founder of the popular tech podcast Anastasi in Tech, about the future of AI hardware, GPUs, and the innovations reshaping the semiconductor industry.

Elektor: Let’s start with the fundamentals: What is a GPU, and how did NVIDIA — once focused on graphics for gaming — evolve into a $4 trillion leader at the forefront of the AI revolution?

Anastasiia Nosova: A GPU’s architecture is key. Unlike a CPU, which handles a few complex tasks sequentially, a GPU uses thousands of simple cores to perform many calculations in parallel. This design, originally intended for graphics, is perfectly suited to the massive matrix multiplications at the heart of AI workloads. NVIDIA’s success stems not only from delivering the right hardware at the right moment, but also from its ability to identify key market trends early and go all in. Most critically, it made a bold, strategic investment in the CUDA software platform. CUDA became the standard for programming GPUs for AI and scientific tasks, creating a powerful software “moat” that gave NVIDIA an incredible head start.
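To make the parallelism point concrete, here is a minimal Python sketch. It is an illustration only, not how CUDA or any GPU actually schedules work, and the function names are hypothetical. Every element C[i][j] of a matrix product is an independent dot product, so all of them can be computed at the same time. The thread pool below merely stands in for a GPU's many simple cores.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def dot(row, col):
    """One output element: the dot product of a row of A and a column of B."""
    return sum(a * b for a, b in zip(row, col))


def parallel_matmul(a, b, workers=8):
    """Compute A @ B by treating each output element as an independent task.

    No task depends on another task's result, which is the property that lets
    a GPU spread this work across thousands of cores. (Python threads give no
    real speedup here because of the GIL; the point is task independence.)
    """
    n, m = len(a), len(b[0])
    cols = [list(col) for col in zip(*b)]  # columns of B
    indices = list(product(range(n), range(m)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as `indices`.
        values = list(pool.map(lambda ij: dot(a[ij[0]], cols[ij[1]]), indices))
    # Reassemble the flat list of results into an n x m matrix.
    return [values[i * m:(i + 1) * m] for i in range(n)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(parallel_matmul(A, B))  # [[19, 22], [43, 50]]
```

On a real GPU, each of these independent dot products (or tiles of them) would be handled by its own group of threads, which is why the workload maps so well to the hardware.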

Elektor: That’s a powerful first-mover advantage. But in a market this large, is there serious competition? Why aren’t we seeing other $4 trillion chipmakers?

Anastasiia: The competition is significant and growing. Traditional rivals like AMD are offering powerful alternatives: its MI325X GPUs show strong performance and better cost-efficiency than NVIDIA’s H100 and H200 in specific large-model inference tasks. A particularly interesting trend is that the world’s largest companies by market cap, including Google, Microsoft, Amazon, and Apple, are all designing their own chips, which has become a key differentiator. I break it all down on my podcast. Google alone shipped $6 billion worth of TPUs internally in 2024. Meanwhile, innovative startups like Cerebras are pushing the envelope with radical wafer-scale designs. While NVIDIA maintains a clear lead today, it is being challenged on all fronts and must continue to innovate at a rapid pace.

Elektor: With all these players building chips, are we going to see an oversupply of AI hardware anytime soon?

Anastasiia: An oversupply is highly unlikely. The demand for AI compute is exploding due to widespread adoption and, more importantly, the growing complexity of AI models. For instance, advanced reasoning models require up to 10 times more compute than traditional ones. Despite optimization efforts, the overall trend is clear: demand for compute is estimated to grow 4× to 5× annually, far outpacing the current supply.

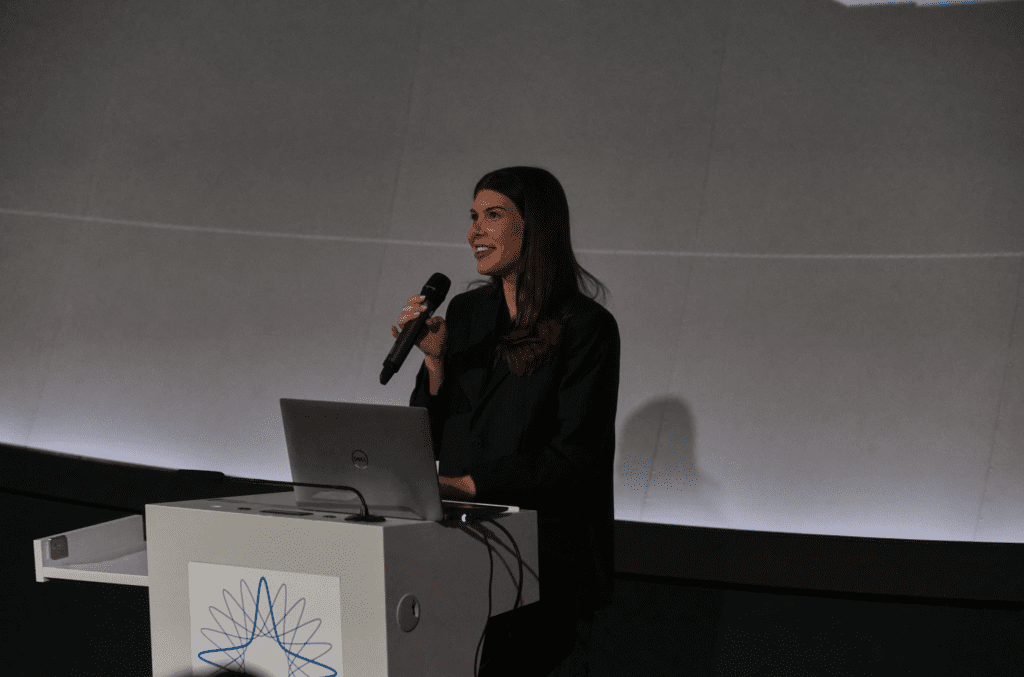

Anastasiia at the “Green Computing in the AI Era” event, where she discussed energy-efficient AI, highlighting advances in photonics, quantum computing, and probabilistic computing.

Elektor: Are we keeping up with this staggering demand?

Anastasiia: Hardware is struggling to keep up with the pace of AI demand. Research from Epoch AI shows that hardware performance is improving by only 1.3× per year, while demand is growing 4× to 5× annually. Building more data centers isn’t a sustainable solution in the long run. These new “AI gigafactories” already consume massive amounts of power: 500 megawatts and rising. At the same time, networking and compute bottlenecks are becoming increasingly severe. It’s clear that incremental improvements won’t be enough. We need fundamental innovations in computing.
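The gap between those two growth rates compounds year after year. Taking the quoted figures at face value (demand growing 4× to 5× per year, hardware performance 1.3× per year) and assuming both start from parity and grow at constant rates, a back-of-the-envelope calculation shows how quickly the shortfall widens. The function below is a hypothetical illustration, not an Epoch AI model.

```python
def compute_gap(years, demand_growth=4.0, hardware_growth=1.3):
    """Ratio of compute demand to hardware performance after `years`.

    Assumes (hypothetically) that both start at parity and grow at constant
    annual rates, so the ratio compounds as (demand / hardware) ** years.
    """
    return (demand_growth / hardware_growth) ** years


for years in (1, 3, 5):
    low = compute_gap(years, demand_growth=4.0)
    high = compute_gap(years, demand_growth=5.0)
    print(f"{years} year(s): demand outpaces hardware by {low:,.0f}x to {high:,.0f}x")
```

Under these assumptions, after five years at 4× per year, demand would be roughly 276 times what hardware improvements alone deliver. That difference has to be covered by deploying more chips, which is exactly what drives the growth in data centers and their power consumption.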

Elektor: You mentioned networking. Why is it becoming so critical?

Anastasiia: In AI data centers, thousands of GPUs must work together as one large, distributed system, constantly exchanging huge amounts of data with minimal delay. Any latency or bandwidth bottleneck slows down the entire pipeline, no matter how powerful each GPU is. Traditional electrical interconnects are becoming a severe bottleneck, hitting physical limits on speed and power consumption. The clear solution is optics. Light-based communication is now moving from connecting servers (“scale-out”) to connecting chips within a single system (“scale-up”). This shift, with technologies like co-packaged optics, is key to unlocking the next level of performance.

An even bigger challenge in scaling AI data centers is power: not just the amount, but how efficiently it’s used. These facilities demand enormous, continuous supplies of electricity, often exceeding what local power grids can deliver. I’ve been talking about this for a while on my YouTube channel. Whoever secures access to cheap, abundant power will ultimately win the AI race.

Elektor: It seems every so often we hear news that “Moore’s Law is dead,” but then a new, smaller “nanometer technology” is announced. Can you comment on this cycle?

Anastasiia: Moore’s Law isn’t dead, but it has certainly evolved. Around the 22 nm node, the industry moved from 2D “planar” transistors to 3D FinFET structures to overcome physical limits. Now, next-gen AMD and Apple chips are adopting “nanosheet” transistors, marking the next milestone in transistor scaling. Today, progress is less about shrinking and more about smart 3D designs that boost performance and density. In the next decade, however, the real leaps in performance may come from new materials, such as 2D and carbon-based materials. Eventually, this all leads to the idea of CMOS 2.0, a new way of building chips where each layer is optimized for a specific task. Instead of cramming everything into a single flat chip (as we’ve done for decades), we can use the best material and technology for each layer, whether it’s for AI, graphics, or something else.

Elektor: Looking ahead, which specific technology do you see as the most transformative for the future of AI computing?

Anastasiia: The future won’t be defined by a single silver bullet, but by a combination of technologies. To move forward, we need to advance all three pillars of AI hardware together: compute, memory, and interconnect. Improving just one creates new bottlenecks in the others. Personally, I’m most excited about the potential of silicon photonics, not just for connecting chips, but for computing itself. Doing calculations with light offers major gains in speed and energy efficiency. I believe the next big leap in AI hardware will come from tightly integrating photonics with electronics.

Elektor: For engineers and startups looking to enter the AI hardware space today, where are the biggest opportunities still open?

Anastasiia: This is a very interesting question. In my opinion, the most fertile ground is at the intersection of electronics and photonics. I am personally very interested in optical computing; there are still immense performance and efficiency gains to be explored in that domain. Another area, which is often overlooked but absolutely critical, is power conversion and delivery. The challenge of efficiently converting an 800 V DC supply down to the 0.7 V needed by the processor core is immense. Furthermore, advanced technologies like backside power delivery networks will flip traditional chip power design upside down, creating a huge moment for innovation. Finally, the developing chiplet ecosystem will open new doors, allowing startups to design specialized components and compete in the hardware race without the prohibitive cost of designing a full, monolithic system-on-chip.
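A rough calculation shows why the 800 V to 0.7 V conversion is so demanding. The sketch below assumes a hypothetical 1,000 W processor and a hypothetical 0.1 milliohm delivery path (round numbers chosen for illustration, not figures from the interview), with ideal, lossless conversion. The current drawn is I = P / V, and the heat lost in the delivery path scales with I²R.

```python
def current_amps(power_watts, voltage_volts):
    """Current drawn at a given voltage for a given power (I = P / V)."""
    return power_watts / voltage_volts


def resistive_loss_watts(current, resistance_ohms):
    """Power lost as heat in a conductor carrying `current` (P = I^2 * R)."""
    return current ** 2 * resistance_ohms


POWER_W = 1000.0          # hypothetical processor power
BUS_V, CORE_V = 800.0, 0.7
PATH_R = 0.0001           # hypothetical 0.1 milliohm delivery path

i_bus = current_amps(POWER_W, BUS_V)    # current on the 800 V bus
i_core = current_amps(POWER_W, CORE_V)  # current at the 0.7 V core
print(f"step-down ratio: {BUS_V / CORE_V:,.0f}:1")
print(f"current at {BUS_V:.0f} V: {i_bus:.2f} A, at {CORE_V} V: {i_core:,.0f} A")
print(f"loss in the same path: {resistive_loss_watts(i_bus, PATH_R):.6f} W vs "
      f"{resistive_loss_watts(i_core, PATH_R):,.0f} W")
```

Under these assumptions, the core draws about 1,429 A, and the same path resistance that wastes a fraction of a milliwatt at 800 V would dissipate roughly 204 W at 0.7 V. This is why power is distributed at high voltage for as long as possible and the final step-down happens as close to the processor as possible.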

Elektor: Is there a risk that the AI compute race becomes too centralized, with only a few hyperscalers or labs controlling access to the most powerful systems?

Anastasiia: Absolutely, that’s already happening. However, I am more concerned about the concentration of compute in the hands of a few leading AI labs than about the hyperscalers who provide the infrastructure. The open-source strategies we see from some labs are a very positive and viable way to democratize access to powerful AI. But we have to be realistic: if one of the closed-source labs achieves a rapid, discontinuous advance in AI performance, some centralization of “power” is inevitable. From a European perspective, I believe we should treat this as a matter of strategic autonomy and definitely invest more capital in chip development, data centers, and AI capabilities.

Editor’s note: eeNews Europe is an Elektor International Media publication.

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News