Close encounter of the haptic type

The retroactive force allows the system to “resist” the user’s input according to what the user ought to feel when interacting with the objects shown on a screen. The force feedback can be calculated from simulated material properties (for example, when interacting with virtual objects), or derived from real sensor data (for example, when the user is operating a robot remotely).
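To make the first case concrete, here is a minimal sketch of the classic penalty-based “virtual wall”. When the tool tip penetrates a simulated surface, the device pushes back with a spring force proportional to the penetration depth, plus a damping term that opposes the tool's velocity. This is a generic textbook model, not the method of any vendor mentioned in this report. The one-dimensional geometry and the stiffness and damping values are assumptions chosen for illustration.

```python
def wall_force(x, v, wall=0.0, k=800.0, b=2.0):
    """Return the 1-D force (N) of a penalty-based virtual wall.

    x: tool position in metres (the wall occupies x < wall)
    v: tool velocity in m/s
    k: hypothetical stiffness in N/m; b: hypothetical damping in N*s/m
    """
    penetration = wall - x
    if penetration <= 0.0:
        return 0.0  # free space: no resistance
    # Spring term pushes the tool back out; damping term opposes its velocity.
    force = k * penetration - b * v
    return max(force, 0.0)  # a wall can push but never pull
```

Lowering `k` makes the same surface feel softer, which is how a simple simulation tells hard objects from elastic ones. In practice such a loop has to run fast (haptic loops commonly run at around 1 kHz) for stiff walls to feel solid and stable.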

Two industrial sponsors of this year’s EuroHaptics, Force Dimension and Haption, are well-established players in this market, offering joystick-like interfaces that render resistive forces and torques across multiple degrees of freedom.

The sensation is stunning as you manipulate virtual objects in 3D, with their hard and soft boundaries, weights and elastic behaviour. When operating robots, the scaling of movement and forces can be tuned arbitrarily in software. In robotic surgery, appropriate software can filter out tremors and breathing movements for added precision.
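Motion scaling and tremor filtering can be sketched in a few lines. The hypothetical `MasterToSlave` class below scales the operator's hand motion down by a fixed factor and passes it through a first-order low-pass filter, so that fast oscillations such as hand tremor (which sits at several hertz) are attenuated. It also scales the force measured at the tool up before it is rendered at the hand. The gains, the 2 Hz cutoff and the 1 kHz sample rate are illustrative assumptions; commercial surgical systems use their own, more elaborate filtering, and compensating for a patient's breathing is a separate problem not covered here.

```python
import math


class MasterToSlave:
    """Hypothetical teleoperation mapping: scale and smooth hand motion,
    amplify tool forces on the way back to the operator."""

    def __init__(self, motion_scale=0.2, force_gain=5.0,
                 cutoff_hz=2.0, rate_hz=1000.0):
        self.motion_scale = motion_scale  # 5 mm of hand motion -> 1 mm at the tool
        self.force_gain = force_gain      # 1 N at the tool -> 5 N at the hand
        # Smoothing factor of a first-order (RC) low-pass filter at this rate.
        dt = 1.0 / rate_hz
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        self.alpha = dt / (rc + dt)
        self.filtered = None

    def command(self, hand_pos):
        """Convert one hand-position sample into a tool-position command."""
        if self.filtered is None:
            self.filtered = hand_pos  # initialise on the first sample
        else:
            # Components well above cutoff_hz (e.g. tremor) are attenuated.
            self.filtered += self.alpha * (hand_pos - self.filtered)
        return self.motion_scale * self.filtered

    def feedback(self, tool_force):
        """Scale a force measured at the tool into the force rendered at the hand."""
        return self.force_gain * tool_force
```

With these values a steady hand offset passes through at one fifth of its size, while a 10 Hz oscillation is reduced to roughly a fifth of its already scaled amplitude.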

In academia, new shapes and implementations keep cropping up, such as the adaptive interface presented by researchers from Kyung Hee University (Korea). By combining video tracking of the fingers with a movable silicone balloon, inflated by a pneumatic actuator to match the size of the virtual object being touched, the researchers recreate the sensation of really handling soft, round objects.

(a) The concept of this pneumatic haptic interface, (b-d) the size of the balloon adjusted to fit the contact area, and (e-g) the user can grasp the interface and move the hand to feel the variations in size.

The balloon is mounted on a position-controlled robotic arm so that it follows the user’s hand and only makes contact when the hand and the object touch in the virtual world. This system could be used to interact with a human avatar or to palpate virtual organs, say for medical training purposes.

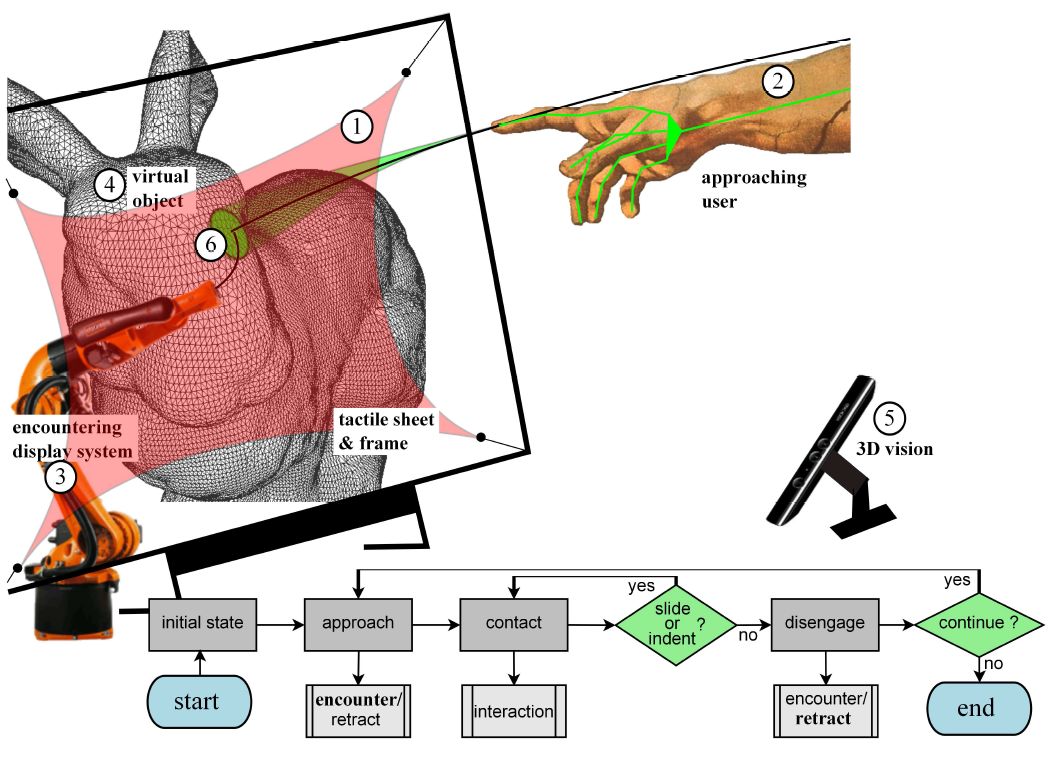

The solution unveiled by researchers from the University of Leuven (Belgium) in their paper “Towards Palpation in Virtual Reality by an Encountered-type Haptic Screen” is something of a hybrid between a screen and the encountered-type interface described earlier.

Put simply, the joystick is replaced with a screen mounted on a robot arm, featuring a tensioned polyethylene sheet in front of the user for adequate compliance. Markers on both the user’s index finger and the screen’s frame allow a camera to track the finger’s position relative to the screen precisely.

Concept and flow chart of large VR object palpation through encountering display.

Not only does the screen follow the index finger, but the system also computes how stiff the sheet ought to be when the user comes into contact with it and moves over or into the surface, so as to match the impedance of the soft virtual object being touched.

Other haptic interfaces for virtual 3D manipulation take the form of finger straps tied to multiple strings whose tension is precisely controlled by motors. Such arrangements, dubbed SPIDAR (Space Interface Device for Artificial Reality) by researchers from the Tokyo Institute of Technology, can be rather complex but can engage every finger, as demonstrated with their SPIDAR-10.

The wire-driven multi-finger haptic interface can render 3-degree-of-freedom spatial force feedback on all ten fingers through four wires attached to each fingertip.

To allow unconstrained two-handed manipulation of virtual objects, the wire actuators are mounted on rotary frames that turn with the user’s hands. Users can thus twist their hands (say, to solve a virtual Rubik’s cube) while interference between the wires is reduced.
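Because a wire can only pull, rendering a 3-degree-of-freedom force with four wires per fingertip means finding four non-negative tensions whose vector sum equals the desired force. The article does not describe how SPIDAR-10 solves this, so the following is a generic sketch under stated assumptions: the wire anchor points are known, the fingertip lies inside the tetrahedron they form, and every wire is kept taut with a hypothetical minimum tension `t_min`. Four directions in 3D generally admit a one-dimensional family of exact solutions, so the sketch takes one particular solution and shifts it along the null space until every tension reaches `t_min`.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def det3(c0, c1, c2):
    """Determinant of the 3x3 matrix with columns c0, c1, c2."""
    return dot(c0, cross(c1, c2))


def wire_tensions(fingertip, anchors, force, t_min=0.1):
    """Tensions for 4 wires (fingertip -> anchor) summing to `force`."""
    # Unit vectors from the fingertip toward each wire anchor.
    units = []
    for a in anchors:
        d = [a[k] - fingertip[k] for k in range(3)]
        n = math.sqrt(dot(d, d))
        units.append([x / n for x in d])
    # Null-space vector of the 3x4 direction matrix via cofactors,
    # so that sum(null[i] * units[i]) == 0.
    null = []
    for i in range(4):
        cols = [units[j] for j in range(4) if j != i]
        null.append((-1) ** i * det3(*cols))
    if all(x < 0 for x in null):
        null = [-x for x in null]
    if not all(x > 0 for x in null):
        raise ValueError("fingertip is outside the wire workspace")
    # Particular solution with wire 3 slack (Cramer's rule on wires 0-2).
    d = det3(units[0], units[1], units[2])
    t_p = [det3(force, units[1], units[2]) / d,
           det3(units[0], force, units[2]) / d,
           det3(units[0], units[1], force) / d,
           0.0]
    # Shift along the null space until the slackest wire carries exactly t_min.
    lam = max((t_min - t_p[i]) / null[i] for i in range(4))
    return [t_p[i] + lam * null[i] for i in range(4)]
```

With anchors at the corners of a regular tetrahedron centred on the fingertip, a zero force request leaves all four wires at exactly `t_min`; any other request raises some tensions above that floor.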

To round off this report, I would like to highlight two young startups.

Spun out of the Institut des Systèmes Intelligents et de Robotique (ISIR) in Paris and co-founded by Professor Vincent Hayward, General Chair of the EuroHaptics 2014 conference, Actronika was demonstrating haptic effects based on a seemingly simple idea: playing recorded sounds through a vibrotactile transducer to add the haptic effects one would feel in the corresponding real-world experience.

In one demonstration, you held a chopstick fitted with a Haptuator (a high-bandwidth 9 x 9 x 32 mm vibrotactile transducer from Canadian company TactileLabs) while watching a video of a stylus pushing its way through a box full of marbles.

The Haptuator was driven with the original audio recordings of the video, much like a common loudspeaker except that its output is felt as acceleration rather than heard as sound. Pushing the vibrotactile chopstick against a flat screen then gave exactly the sensation of pushing it through colliding marbles.
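The article does not say how Actronika processes its recordings before playback, but the general idea of feeding audio to a vibrotactile actuator can be sketched. The hypothetical functions below band-limit a mono audio signal to roughly the band in which the skin is sensitive to vibration, then normalise it to the actuator's drive range. The 20 Hz to 1 kHz band and the simple one-pole filters are assumptions; a real product would also compensate for the actuator's own frequency response.

```python
import math


def one_pole_lowpass(x, cutoff_hz, fs):
    """First-order low-pass filter applied sample by sample."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs)
    y, out = 0.0, []
    for s in x:
        y += a * (s - y)
        out.append(y)
    return out


def band_limit(samples, fs, low_hz=20.0, high_hz=1000.0):
    """Keep roughly low_hz..high_hz of the signal.

    Subtracting a low-passed copy acts as a first-order high-pass that
    removes DC and rumble; a second low-pass then drops content above
    high_hz, which the skin barely perceives.
    """
    slow = one_pole_lowpass(samples, low_hz, fs)
    high_passed = [s - l for s, l in zip(samples, slow)]
    return one_pole_lowpass(high_passed, high_hz, fs)


def normalise(signal, peak=1.0):
    """Scale the signal so its largest magnitude equals peak (the drive limit)."""
    m = max((abs(v) for v in signal), default=0.0)
    if m == 0.0:
        return list(signal)
    return [peak * v / m for v in signal]
```

A drive signal would then be obtained with something like `normalise(band_limit(audio, fs=16000), peak=0.8)`, where `audio` is a list of samples and `peak=0.8` is an arbitrary headroom choice.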

Another demonstration consisted of the same Haptuator mounted underneath an empty plastic glass. As water was poured into the glass and moved around in the video, the haptic effects rendered through the empty glass truly gave the impression that you were holding the real thing.

Impressive, yet I am not sure how this would be implemented in commercial applications. Actronika was officially registered only the day before the conference started, and its newly appointed CEO Rafal Pijewski, a former mechanical design engineer at ISIR, was happy to discuss potential applications.

“At this stage, we are only proving a concept, so we have yet to see how this would be commercialized”, Pijewski told us, “but we want to show how one can easily add haptic effects to any object and match any displayed content, real or virtual”.

The company is building libraries of haptic effects based on this principle and encourages an open-source model so that more developers and companies can develop and exchange these haptic effects. Actronika would act as a consultancy and get royalties for licensing the IP. “We’ll first go through an incubation period to identify in which markets we can best develop our activity”, added Pijewski.

One application I can think of is TV advertising, where viewers would get these haptic sensations through their remote control, but the approach could also apply to video games or movies for better immersion (with Haptuators embedded in your seat).

Elitac, a year-old startup, is busy selling waist belts and various bands fitted with tiny vibration motors whose modular configuration can easily be rearranged using Velcro straps. The vibration motors are controlled through a Bluetooth-enabled unit, making these tactile belts a customizable tool for haptics research.

The company commercializes a Science Suit Kit and various add-ons, but it is also exploring commercial applications of its own. One application under development at Elitac is a haptic GPS-guidance system for motorbikers.

With localized vibrations, the waist strap tells the wearer when to turn right or left, or warns about a wrong path. More information could of course be encoded; it is just a matter of finding the combinations that are most intuitive to interpret.
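The basic direction cue can be sketched as a mapping from the bearing of the next waypoint, relative to the rider's heading, to one motor on the belt. The sketch assumes a hypothetical belt of eight motors spaced evenly around the waist, numbered clockwise (seen from above) starting at the front, and uses an all-motors pulse as a hypothetical “wrong path” warning. Elitac's actual encoding is not described in the article.

```python
def motor_for_bearing(heading_deg, bearing_deg, n_motors=8):
    """Index of the motor pointing toward bearing_deg for a rider facing heading_deg.

    Motor 0 sits at the front of the belt and indices increase clockwise
    seen from above, so with 8 motors, 2 is the right hip and 6 the left hip.
    """
    relative = (bearing_deg - heading_deg) % 360.0  # clockwise from straight ahead
    sector = 360.0 / n_motors
    # Round to the nearest motor; the modulo wraps bearings just left of
    # straight ahead back to motor 0.
    return int((relative + sector / 2.0) // sector) % n_motors


def belt_cue(heading_deg, bearing_deg, off_route, n_motors=8):
    """Return the list of motors to pulse for this navigation update."""
    if off_route:
        return list(range(n_motors))  # hypothetical "wrong path" warning: all motors
    return [motor_for_bearing(heading_deg, bearing_deg, n_motors)]
```

A right turn ahead (bearing 90 degrees clockwise of the heading) pulses motor 2 on the right hip; richer cues could use pulse rhythm or intensity, as the paragraph above suggests.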

“Such haptic feedback doesn’t require the motorbiker to look at a GPS screen, it doesn’t distract the rider with voice commands either”, Jasper Dijkman, Elitac’s commercial director, told us. Ideally, the company would strike a partnership with a motorcycle jacket manufacturer.

The company is also working on the RANGE-IT project, which combines a stand-alone wearable haptic interface with 3D camera hardware and image recognition software to detect close-range objects (less than 7 m away) in any indoor space and present them to the wearer. This could serve visually impaired people, but also firefighters or other rescue staff working in difficult environments.

At the conference, one paper suggested that such haptics could improve wearable coaching units for athletes, who would receive direct feedback during their performance rather than looking at their watch or analysing data afterwards. An entire routine could be programmed with thresholds to cross and performance levels to maintain, with haptic effects signalling whether these levels are being reached.
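Such a programmed routine can be sketched as a list of timed segments, each with a target range for a measured quantity such as heart rate or pace. At every reading the wearable picks a cue, and each cue would be mapped to its own vibration pattern. The segment structure, cue names and example values are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One hypothetical step of a training routine."""
    duration_s: float
    low: float   # minimum value to maintain (e.g. heart rate in bpm)
    high: float  # maximum allowed value


def haptic_cue(routine, t_s, value):
    """Return 'below', 'above', 'in_range' or 'done' for the segment active at t_s."""
    elapsed = 0.0
    for seg in routine:
        if t_s < elapsed + seg.duration_s:
            if value < seg.low:
                return "below"   # e.g. a short double pulse: push harder
            if value > seg.high:
                return "above"   # e.g. a long pulse: ease off
            return "in_range"    # e.g. no vibration
        elapsed += seg.duration_s
    return "done"                # routine finished
```

For example, a 10-minute warm-up held between 120 and 140 bpm followed by a 5-minute effort between 150 and 170 bpm would be written as `[Segment(600, 120, 140), Segment(300, 150, 170)]`.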

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News
