Google’s deep learning comes to Movidius

In an interview with EE Times, Remi El-Ouazzane, CEO of Movidius, called the agreement “a new chapter” in the partnership.

In Project Tango, Google used a Movidius chip in a platform that uses computer vision for positioning and motion tracking. The project’s mission was to let app developers create user experiences built on indoor navigation, 3D mapping, physical space measurement, augmented reality and recognition of known environments.

The new agreement with Google is all about machine learning. It’s intended to bring highly intelligent models – extracted from deep learning at Google’s data centers – over to mobile and wearable devices.

El-Ouazzane said Google will purchase Movidius computer vision SoCs and license the entire Movidius software development environment, including tools and libraries.

Google will deploy its advanced neural computation engine on a Movidius computer vision platform.

Movidius’ vision processor will then “detect, identify, classify and recognize objects, and generate highly accurate data, even when objects are in occlusion,” El-Ouazzane explained. “All of this is done locally without Internet connection,” he added.

The public endorsement from Google will boost the startup, said Jeff Bier, a founder of the Embedded Vision Alliance. The announcement is also “interesting,” he added, because it shows “Google has a serious interest in [the use of deep learning for] mobile and embedded devices.” It demonstrates that Google’s commercial interest in artificial neural networks isn’t limited to their use in data centers.

Different teams within Google, including its machine intelligence group (Seattle), are involved in this agreement with Movidius. Google will be developing commercial applications for deep learning. Movidius is “likely to get more input from Google, and get opportunities – over time – to optimize its SoC for Google’s evolving software,” Bier speculated.

Movidius’ agreement with Google is unique. “Not everyone has access to Google’s well-trained neural networks,” said El-Ouazzane, let alone the opportunity to collaborate on computer vision with the world’s most prominent developer of machine intelligence.

Asked if the work with Google involves the development of embedded vision chips for autonomous cars (i.e. Google Cars), Movidius CEO El-Ouazzane said, “Google intends to launch a series of new products [based on the technology]. I can’t speak on their behalf. But the underlying technology – high quality, ultra-low power for embedded vision computing – is very similar” whether applied to cars or mobile devices.

For now, however, Movidius’ priority is getting its chip into mobile and wearable devices. El-Ouazzane said, “Our [embedded vision SoCs] are to the IoT space, as Mobileye’s chips are to the automotive market.” Mobileye today has the lion’s share of the vision chip market for Advanced Driver Assistance Systems (ADAS).

The search giant is hungry for technology to “recognize human speech, objects, images, emotion on people’s faces, or even fraud detection,” explained Bier. “Google has a commercial interest in understanding context better, when people are searching for certain things.”

However, both physical objects and human emotions are ambiguous, and they come with infinite variability, Bier said.

They pose hard problems for computers that tend to seek solutions "in formulaic ways." These are the tasks "we don’t know how to write instructions for. They have to be learned by examples," as Blaise Agüera y Arcas, head of Google’s machine intelligence group in Seattle, said in Movidius’ promotional video.

In recent years, though, "machine learning is cracking the problem," said Bier.

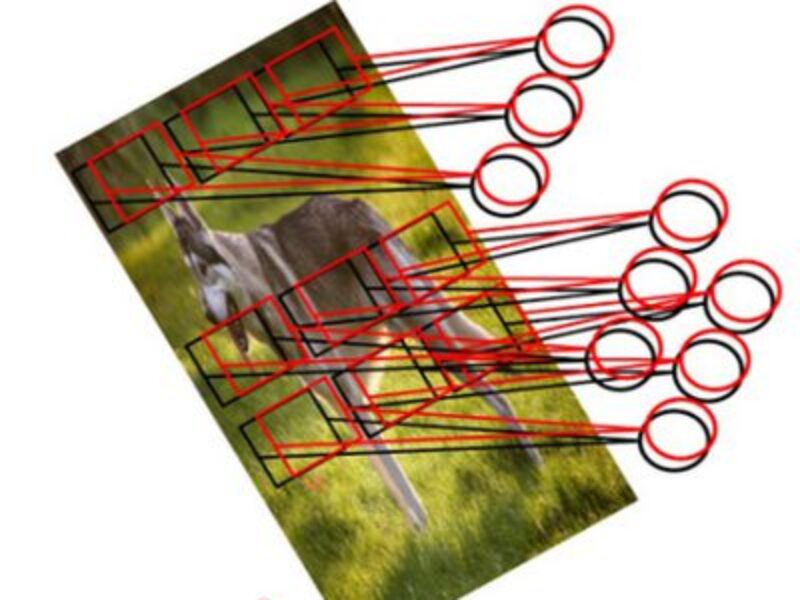

Before the emergence of Convolutional Neural Networks (CNNs) in computer vision, algorithm designers had to make many decisions across the many layers and steps of a vision algorithm. Such decisions include the type of classifier used for object detection and the methods used to aggregate features.

In other words, as Bier summed up: "Traditional computer vision took a very procedural approach in detecting objects." With deep learning, however, designers "don’t have to tell computers where to look. Deep learning will make decisions for them."

The segments of the image are fed through nodes of the convolutional network. The nodes start to differentiate not at the pixel level, but at the feature level (e.g., paws, whiskers, etc.). A CNN is far better at classification than older approaches where the programmer must explicitly code a set of rules. (Source: Movidius)
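To make the idea of feature-level differentiation concrete, here is a minimal sketch of a single convolutional filter in plain Python. It is illustrative only: the kernel is hand-written to respond to a vertical edge, whereas a real CNN would learn its filters from training examples, and the names `convolve2d`, `relu`, `IMAGE` and `VERTICAL_EDGE` are hypothetical rather than taken from any Movidius or Google software.

```python
# Illustrative only: a hand-written edge kernel stands in for a filter
# that a trained CNN would learn from examples.

def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# 4x4 image: dark left half (0), bright right half (1).
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Responds to dark-to-bright transitions when moving left to right.
VERTICAL_EDGE = [
    [-1, 1],
    [-1, 1],
]

FEATURE_MAP = relu(convolve2d(IMAGE, VERTICAL_EDGE))
print(FEATURE_MAP)
```

The resulting feature map is non-zero only in the column where the edge sits, so later layers can work with "there is an edge here" instead of raw pixel values. Stacking many such learned filters is what lets a network respond to higher-level features.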

With all the learning and training carried out in the artificial neural network, "Computers are now gaining intuition," said Bier.

Obviously, Google wants to expand this technology beyond the data center, by bringing it to mobile devices used in the real world.

In theory, a Google Android device equipped with an embedded vision-processing chip would know more about its user and better predict the user’s needs. It could intuit and provide what the user wants, even when the device is offline.

Picture, for example, a swarm of mobile, wearable devices or even drones equipped with Movidius’ embedded vision SoC, said El-Ouazzane. They can autonomously classify and recognize objects in an unsupervised manner. And they don’t have to go back to the cloud. When connected, the devices can send less detailed metadata back to the trained network. The network in return sends back updated layers and weights learned in the artificial neural network.
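The loop El-Ouazzane describes (classify locally, upload compact metadata when connected, receive updated weights in return) can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the actual Google or Movidius protocol, which the article does not detail: the classes `EdgeDevice` and `CloudTrainer`, the `exchange` method and the two-feature linear classifier are all hypothetical.

```python
# Toy sketch: every class, method and weight value here is hypothetical.

class CloudTrainer:
    """Stands in for the data-center side that holds the retrained weights."""

    def __init__(self, weights):
        self.weights = weights
        self.received = []  # metadata uploaded by devices

    def exchange(self, metadata):
        """Accept device metadata and hand back a copy of the current weights."""
        self.received.extend(metadata)
        return {label: list(ws) for label, ws in self.weights.items()}


class EdgeDevice:
    """Classifies on the device and talks to the cloud only when sync() is called."""

    def __init__(self, weights):
        self.weights = weights        # label -> per-feature weights
        self.pending_metadata = []    # compact results awaiting upload

    def classify(self, features):
        """Score each label with a dot product; no network access needed."""
        scores = {
            label: sum(w * x for w, x in zip(ws, features))
            for label, ws in self.weights.items()
        }
        label = max(scores, key=scores.get)
        # Queue only the label and score, not the raw input.
        self.pending_metadata.append({"label": label, "score": scores[label]})
        return label

    def sync(self, cloud):
        """When connected: upload metadata, then install the returned weights."""
        self.weights = cloud.exchange(self.pending_metadata)
        self.pending_metadata = []


device = EdgeDevice({"cat": [1.0, 0.0], "dog": [0.0, 1.0]})
cloud = CloudTrainer({"cat": [0.9, 0.1], "dog": [0.2, 0.8]})

first_label = device.classify([0.9, 0.2])  # runs entirely offline
device.sync(cloud)                         # only happens when connected
print(first_label, device.weights)
```

The design point is that raw sensor data never has to leave the device: only small result records go up, and only model parameters come down.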

"Deep learning isn’t a science project" for Google, said Bier. "It gives Google clear, commercial advantage for its business."

The alliance with Movidius won’t preclude Google from working with other embedded vision SoC vendors.

Surprisingly, though, very few embedded vision SoCs are on the market today, said Bier, although there are silicon IPs now available from companies like Ceva, Cadence and Synopsys.

Chip vendors are using everything from CPU and GPU to FPGA and DSP to enable CNN on vision SoCs. But when it comes to chips optimized for embedded devices like wearables, “You need a chip that costs less and runs at very low power,” Bier said.

Qualcomm is offering embedded vision on its “Zeroth platform,” a cognitive-capable platform. Some system vendors are using Xilinx Zynq FPGA for it. Cognivue, now a part of NXP Semiconductors, claims a chip designed for parallel processing of sophisticated Deep Learning (CNN) classifiers. But NXP’s focus is solely set on automotive, a growing market dominated by Mobileye.

The Movidius CEO stressed, “It’s one thing to talk about theories, but it’s another to deploy CNN on a commercially available chip on milliwatts of power.”

El-Ouazzane acknowledged that when the company first developed its computer vision chip, “We weren’t necessarily thinking about CNN.” But in the last few years, “we’ve seen the industry’s accelerated interest in the use of CNN in the cloud.” It turns out that Movidius’ chip, serendipitously, is well suited to artificial neural networks.

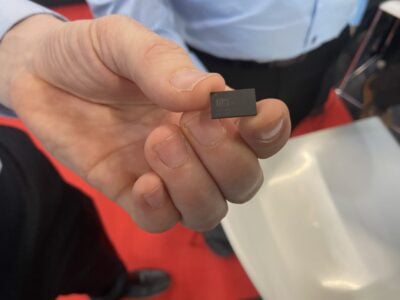

Google picked Movidius because the startup’s latest chip, MA2450, is the only chip commercially available that can do machine learning on the device.

MA2450 is a member of Movidius’ Myriad 2 family of vision processing unit SoCs.

Myriad 2’s special micro-architecture (Source: Movidius)

Myriad 2 provides “exceptionally high sustainable on-chip data and instruction bandwidth to support the twelve processors, 2 RISC processors and high-performance video hardware accelerators,” according to the company.

In particular, it contains a proprietary processor called SHAVE (Streaming Hybrid Architecture Vector Engine). SHAVE contains wide and deep register-files coupled with a Variable-Length Long Instruction-Word (VLLIW) controlling multiple functional units including extensive SIMD capability for high parallelism and throughput at both functional unit and processor levels.

The SHAVE processor is “a hybrid stream processor architecture combining the best features of GPUs, DSPs and RISC with both 8/16/32 bit integer and 16/32 bit floating point arithmetic as well as unique features such as hardware support for sparse data structures,” explained Movidius.

MA2450 is an improvement on the company’s MA2100, according to El-Ouazzane. The MA2450 uses TSMC’s 28nm HPC process, while the MA2100 uses TSMC’s 28nm HPM process. Computer vision power in the MA2450 is one-fifth that of the MA2100, and the power of the MA2450’s SHAVE processors has been reduced by 35 percent. The MA2450’s memory increased to 4Gbit of DRAM from the MA2100’s 1Gbit. The two 32-bit RISC processors inside the MA2450 run at 600MHz, compared to 500MHz in the older SoC.
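For readers who want the generational changes as relative figures, the following short Python sketch works through the arithmetic using only the numbers quoted above. It assumes the DRAM figure reads as 4Gbit versus 1Gbit, and the dictionary keys and variable names are hypothetical labels, not Movidius terminology.

```python
# Figures come from the paragraph above; key names are illustrative labels.
MA2100 = {"risc_clock_mhz": 500, "dram_gbit": 1}
MA2450 = {"risc_clock_mhz": 600, "dram_gbit": 4}  # assumes DRAM reads as 4Gbit

# RISC clock increase, as a whole-number percentage of the older part.
clock_gain_pct = (
    (MA2450["risc_clock_mhz"] - MA2100["risc_clock_mhz"]) * 100
    // MA2100["risc_clock_mhz"]
)

# DRAM capacity ratio between the two parts.
dram_ratio = MA2450["dram_gbit"] // MA2100["dram_gbit"]

# "One-fifth" of the older computer vision power leaves 20 percent.
vision_power_remaining_pct = 100 // 5

# A 35 percent reduction in SHAVE power leaves 65 percent.
shave_power_remaining_pct = 100 - 35

print(clock_gain_pct, dram_ratio,
      vision_power_remaining_pct, shave_power_remaining_pct)
```

In other words, the quoted figures amount to a 20 percent faster RISC clock and four times the DRAM, alongside computer vision power at 20 percent and SHAVE power at 65 percent of the MA2100’s levels.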

Meanwhile, the industry’s interest in deep-learning-based computer vision is rapidly growing. Bier said the Embedded Vision Alliance is offering a tutorial on CNN and a hands-on introduction to the popular open source Caffe framework on February 22nd with primary Caffe developers from the Berkeley Vision and Learning Center at U.C. Berkeley.
