World’s largest neuromorphic supercomputer aims at 10bn neurons

SpiNNcloud Systems in Germany is developing a neuromorphic supercomputer with up to 10bn neurons.

The SpiNNaker2 system is part of a €1bn European project over the last decade building on the work of Prof Steve Furber, one of the founders of ARM.

The SpiNNaker2 neuromorphic supercomputer has been designed to handle event driven neural networks, deep neural networks and symbolic rule-based AI. This differs from the Hala Point system launched by Intel last month with 1bn neurons.

“We are introducing the largest commercially available hybrid supercomputer that combines event based properties as well as distributed deep neural networks and rule based engines as you have the flexibility on the ARM cores to exchange information in real time,” Hector Gonzalez, co-CEO of SpiNNcloud, tells eeNews Europe.

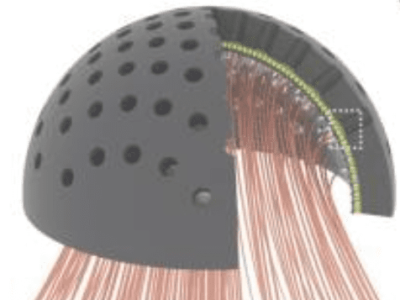

The initial half-size implementation being built in Dresden, Germany, will have up to 5bn neurons. This uses a custom chip with 152 ARM M4F microcontroller cores built on the 22nm silicon on insulator FDX process at GlobalFoundries, using adaptive back bias (ABB) to reduce the power consumption.

There are also neural network accelerator cores and memory management cores on the periphery, as well as exponential and logarithmic functions and two true random number generators (RNG) that sample the thermal noise of the PLL in each core for stochastic or random walk operations.

There are 48 chips on a card, 90 cards in a rack and eight racks, giving a total of 5.25m cores.

“This 5m core supercomputer has 5bn neurons as there are 1000 neurons per core, but 10m cores is the maximum size that we are offering commercially with 1440 boards – the limitation is the routing and maintaining the real time performance. The original system updated in 1ms but the larger you are the more difficult it is. With 1440 boards we can still update in 1ms.”
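The core and neuron counts follow directly from the figures quoted above. The short Python sketch below reproduces the arithmetic; the function and variable names are illustrative, and it assumes, as the quoted totals appear to, that all 152 cores on every chip are counted and that each core hosts 1000 neurons.

```python
# Figures taken from the article; the names are illustrative only.
CORES_PER_CHIP = 152      # ARM M4F cores per SpiNNaker2 chip
CHIPS_PER_BOARD = 48      # chips on a card
BOARDS_PER_RACK = 90      # cards in a rack
NEURONS_PER_CORE = 1000   # "1000 neurons per core"


def system_size(boards):
    """Return chip, core and neuron counts for a given number of boards.

    Assumes every core on every chip is counted towards the total.
    """
    chips = boards * CHIPS_PER_BOARD
    cores = chips * CORES_PER_CHIP
    neurons = cores * NEURONS_PER_CORE
    return {"boards": boards, "chips": chips, "cores": cores, "neurons": neurons}


# Half-size Dresden system: eight racks of 90 boards each.
half = system_size(8 * BOARDS_PER_RACK)
# Maximum commercial configuration: 1440 boards.
full = system_size(1440)

for name, size in (("half-size", half), ("maximum", full)):
    print(f"{name}: {size['boards']} boards, {size['cores'] / 1e6:.2f}m cores, "
          f"{size['neurons'] / 1e9:.2f}bn neurons")
```

On these assumptions the eight-rack machine works out at about 5.25m cores and the 1440-board configuration at roughly twice that, which matches the rounded 5bn and 10bn neuron figures.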

The system uses a mixture of synchronous operation at the chip level and asynchronous communications between boards and racks. The voltage of the chips can be varied dynamically between the threshold of 0.45V and 0.6V depending on the data requirements, for example for a spike of data travelling through the system, and the frequency can also be varied dynamically.
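As a rough guide to why the voltage range matters, dynamic switching power in CMOS logic scales approximately with the square of the supply voltage multiplied by the clock frequency. The sketch below applies that textbook rule to the 0.45V and 0.6V figures; it is not SpiNNcloud's power model, it ignores leakage, and the relative frequency values are placeholders because the article does not give clock speeds.

```python
def relative_dynamic_power(voltage, rel_frequency=1.0, ref_voltage=0.6):
    """Dynamic power relative to running at ref_voltage and full frequency.

    Uses the textbook approximation P_dyn ~ C * V^2 * f and ignores leakage.
    rel_frequency is a fraction of the reference clock (a placeholder value,
    since the article does not state actual frequencies).
    """
    return (voltage / ref_voltage) ** 2 * rel_frequency


# Same clock, lower voltage: (0.45 / 0.6)^2 is about 0.56 of the reference power.
print(f"{relative_dynamic_power(0.45):.2f}")

# Lower voltage and a hypothetical halved clock.
print(f"{relative_dynamic_power(0.45, rel_frequency=0.5):.2f}")
```

Under this approximation, dropping from 0.6V to 0.45V at the same clock cuts dynamic power to a little over half, before any saving from also lowering the frequency.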

“It’s energy efficient computing but you also get AI and the ability to operate the code in an event based way, not only around the spiking framework but we can also use sparsity at different scales, not only for computation but also at the communication level and across different networks,” said Gonzalez. “This is something that is very difficult to do in a GPU architecture.”

The neuromorphic supercomputer will be available on the cloud and can host many different types of AI framework at the same time for sensor networks or to improve the accuracy and safety of generative AI.

“We have had conversations with people in smart cities interested in real time processing of sensor streams, drug discovery using small networks in parallel and this fits nicely into the machine,” said Gonzalez.

“You can scale up the symbolic engines as well as the DNN that can be YOLO or a GPT layer for LLMs and these can be very good at not hallucinating, dealing with incomplete data, this can also be used with sensor streams with reasoning.”

“We are also very interested in running the brain models that are normally very hard to run in other networks because of the connectivity requirements.”

One of the first customers is Sandia National Laboratories in the US. “Brain-like computation requires programmable dynamics, event-based communication, and extreme scale,” said Fred Rothganger from Sandia National Labs. “SpiNNaker2 is the most flexible neural supercomputer architecture available today. At Sandia, we are excited to build applications on this amazing system.”

Furber’s group at the University of Manchester has been developing the software stack for the supercomputer over the last seven years, combining tools for spiking networks, graph-based DNNs and symbolic logic through the TVM interface developed by the University of Washington in the US.
