The challenge at the edge of memory

If data is the new oil, then artificial intelligence (AI) is what will process data into a truly invaluable asset. It’s this belief that’s causing the demand for AI applications to explode right now. According to PwC and MMC Ventures, funding for AI startups is rising rapidly: it reached over $9 billion last year, and tech startups with some type of AI component receive up to 50% more funding than others. This intense investment has led to rapid innovation and advances in AI technology. But the traditional AI usage model of “sweep it up and send it to the cloud” is breaking down, because latency or the energy cost of transmission can make sending the data impractical. Another major challenge is that consumers are increasingly uncomfortable with storing their private data in the cloud, where it could be exposed to the world.

For those reasons, AI applications are being pushed out of their normal data-center environments, allowing their intelligence to reside at the edge of the network. As a result, mobile and IoT devices are becoming “smarter,” and a whole variety of sensors—especially security cameras—are taking up residence at the edge. However, this is where hardware constraints are beginning to cripple innovation.

Increasing the amount of intelligence living at the edge requires far more computational power and memory than traditional edge processors provide. Studies have repeatedly shown that AI model inference accuracy depends heavily on the hardware resources available. Because customers demand ever-higher accuracy (voice detection, for example, has evolved into multifaceted speech and vocal-pattern recognition), the problem keeps intensifying as these AI models grow more complex.

One significant concern is simply the need for electrical power. Arm has predicted that there will be 1 trillion connected devices by the 2030s. If each smart device consumes 1 W (security cameras consume more), then all of these devices combined will consume 1 terawatt (TW) of power. This isn’t simply an “add a bigger battery” problem, either. For context, the total generating capacity of the U.S. in 2018 was only slightly higher, at 1.2 TW. These ubiquitous devices, each individually insignificant, would together create a power catastrophe.
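The aggregate-power arithmetic above can be checked in a few lines. This is only a back-of-the-envelope sketch: the device count, the 1 W per-device figure, and the 1.2 TW capacity are the numbers quoted in the paragraph, and the helper name `aggregate_power_tw` is hypothetical.

```python
# Figures quoted in the article; the 1 W per device is its illustrative assumption.
DEVICES = 1e12           # Arm's prediction: 1 trillion connected devices by the 2030s
WATTS_PER_DEVICE = 1.0   # average draw per device (security cameras draw more)
US_CAPACITY_TW = 1.2     # total U.S. generating capacity in 2018


def aggregate_power_tw(devices: float, watts_each: float) -> float:
    """Total continuous draw of all devices, converted from watts to terawatts."""
    return devices * watts_each / 1e12


total = aggregate_power_tw(DEVICES, WATTS_PER_DEVICE)
print(f"{total:.1f} TW, {total / US_CAPACITY_TW:.0%} of 2018 U.S. generating capacity")
```

Because the total scales linearly with the per-device draw, a fleet averaging 2 W instead of 1 W would already exceed the 2018 U.S. capacity.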

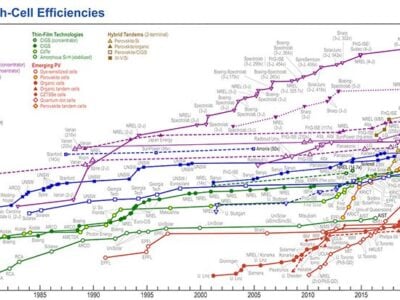

Of course, the goal is to never let the power problem get to that point. AI developers are simplifying their models, and hardware power efficiency continually improves through Moore’s Law and clever circuit design. However, one major challenge remains: the legacy memory technologies, SRAM and DRAM (static and dynamic RAM, respectively). These memories are hitting a wall in density and power efficiency, and they now often dominate system power consumption and cost.

The edge-computing conundrum

The core promise of AI is also its biggest challenge for the edge: the model needs to be constantly adapting and improving. Not only do AI models require a colossal amount of data and time to learn, but they’re never truly “done.” If it were that simple, self-driving cars wouldn’t still have so much difficulty simply getting out of a parking lot.

Even when AI models have been moved to the edge, they still need to keep learning and developing without the hardware amenities of a data-center environment. They must continue tuning themselves while minimizing processing power and energy consumption and making the most of limited local storage. Some applications sidestep this requirement by refining the AI model in the cloud and then frequently pushing the latest model version to the edge device.

More interesting, though, are hybrid approaches such as Google’s Federated Learning, which allow the model to be optimized using local data. This requires robust edge compute power to support very frequent neural-network model updates from the cloud. And because the learning is never “complete,” the device must continually devote substantial processing power and memory to improving the model.
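To illustrate what “optimized using local data” means in practice, below is a toy, single-weight sketch of Federated Averaging (FedAvg), one widely published federated-learning algorithm. It is not Google’s implementation: the linear model `y ≈ w * x`, the helper names `local_update` and `federated_round`, the learning rate, the epoch count, and the client data are all hypothetical. Each simulated device trains on its own data, and only the resulting weights are averaged centrally, weighted by local sample count.

```python
from typing import List, Tuple

# One device's private training data: (x, y) pairs that never leave the device.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few epochs of gradient descent on mean squared error for y ≈ w * x,
    using only this device's local data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w: float, clients: List[Dataset]) -> float:
    """One FedAvg round: every client starts from the global weight, trains
    locally, and the server averages the returned weights, weighted by how many
    samples each client holds. Only weights are exchanged, never raw data."""
    total = sum(len(d) for d in clients)
    return sum(local_update(global_w, d) * len(d) for d in clients) / total


# Hypothetical toy data: both devices observe y = 3x, on different inputs.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, clients)
print(f"learned w = {w:.3f}")
```

After 20 rounds the shared weight should be close to 3. Note that every round costs each device several local training passes, which is exactly the ongoing compute and memory load the paragraph describes.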

And that is precisely the problem: AI models struggle to meet these goals with the resources available at the edge.

Memory: it’s where the power goes

As the apocryphal story goes, when the infamous bank robber Willie Sutton was asked why he chose banks as his targets, he replied “because that’s where the money is.” For many edge AI devices, most of the power is consumed in the memory system. AI processing, especially training, is very memory-hungry, and off-chip memory has become a necessity to keep up with performance demands. Google has found that in a mobile system, over 60% of the total system power budget is spent transferring data back and forth between on- and off-chip memories. That is more than processing, sensing, and all other functions combined.

The obvious answer is therefore to eliminate these data transfers by putting all of the memory on-chip. However, the current on-chip memory of choice, SRAM, is simply too large and power-hungry. If transferring data off-chip is the biggest power hog, the on-chip SRAM itself is close behind. And because SRAM is so large, a chip quickly runs out of area for the amount of memory AI applications need.

To make AI at the edge truly successful, on-chip memory must be able to meet performance demands so that perception tasks can run locally, with high accuracy and energy efficiency.

New memory for the edge

All of these factors have made the AI landscape fertile ground for experimentation and innovation with new memories that have unique or improving characteristics. Hardware is becoming the key performance bottleneck, and solutions to those bottlenecks become differentiators. That’s why leading internet players such as Google, Facebook, Amazon, and Apple are rushing to become silicon designers in search of a hardware competitive edge. Hardware has emerged as the new AI battlefield. Necessity begets invention, and the need for faster AI chips that use less power has opened opportunities for potentially denser, more efficient memory technologies.

One such promising technology is magnetoresistive RAM (MRAM), a memory that’s bound to cross paths with AI as it rapidly moves toward higher density, energy efficiency, endurance, and yields. The semiconductor industry is beginning to invest heavily in MRAM as the technology’s potential slowly becomes reality. Initial research has shown that it offers a number of benefits well suited to intelligent edge applications.

The ubiquitous on-chip working memory today is SRAM, but it has flaws. It has the largest bitcell of the mainstream memory types, which makes it the most expensive per bit, and every bit “leaks” (wastes power) whenever the memory is powered on. MRAM is the only promising new memory with the speed and endurance to replace SRAM.

Because MRAM uses a very small bitcell, it can be three to four times denser than SRAM, allowing more memory to reside on-chip and thus eliminating or reducing the need to shuttle data off-chip. MRAM is also non-volatile, meaning that data is retained even when the power is shut off. A chip can therefore power down its MRAM during idle periods without losing data, which virtually eliminates leakage while idle. This is critical for applications where the AI chip remains idle for extended periods of time. MRAM isn’t the only memory getting attention, though. The demand for AI applications and intelligence at the edge is driving a memory revolution within the semiconductor industry for a wide variety of applications.
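The two MRAM advantages above, density and non-volatility, can be sketched as a toy model. The leakage figure below is a hypothetical placeholder, not a measured value, and the names `OnChipMemory`, `idle_energy_j`, and `mram_capacity_range_mb` are illustrative only. The model charges a volatile memory for leakage across the whole idle period, charges a non-volatile memory nothing because it can be powered off without losing data, and ignores wake-up and power-gating overheads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OnChipMemory:
    name: str
    leakage_w: float    # hypothetical leakage power while the array is powered on
    non_volatile: bool  # True if the data survives power-off (e.g., MRAM)


def idle_energy_j(mem: OnChipMemory, idle_s: float) -> float:
    """Energy spent keeping data across an idle period of idle_s seconds.

    A volatile array must stay powered to hold its contents, so it leaks for
    the entire idle period. A non-volatile array can be switched off and still
    retain its data, so this toy model charges it nothing while idle.
    """
    if mem.non_volatile:
        return 0.0
    return mem.leakage_w * idle_s


def mram_capacity_range_mb(sram_capacity_mb: float) -> tuple[float, float]:
    """Capacity MRAM could offer in the same die area as a given SRAM,
    using the 3x to 4x density advantage cited in the text."""
    return (3 * sram_capacity_mb, 4 * sram_capacity_mb)


# Same hypothetical leakage for both, to isolate the effect of non-volatility.
sram = OnChipMemory("SRAM", leakage_w=0.001, non_volatile=False)
mram = OnChipMemory("MRAM", leakage_w=0.001, non_volatile=True)

one_hour_s = 3600.0
for mem in (sram, mram):
    print(f"{mem.name} idle energy over 1 h: {idle_energy_j(mem, one_hour_s):.1f} J")
print("MRAM capacity in the area of 8 MB of SRAM:", mram_capacity_range_mb(8.0), "MB")
```

Giving both memories the same powered-on leakage is a deliberate simplification: it shows that in this model the idle saving comes from being able to switch the array off, not from a lower leakage rate.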

Other new high-density non-volatile memories, such as 3D XPoint (Intel’s Optane), phase-change memory (PCM), and resistive memory (ReRAM), also bring new possibilities and unique advantages for storage applications. While neither as fast nor as high-endurance as MRAM, and therefore not replacements for SRAM, these non-volatile technologies are extremely dense and offer distinct speed and power advantages over flash memory.

In addition, significant neuromorphic research is investigating the use of these new memory bitcells directly as the synapses and/or neurons of a neural net. Most of this research focuses on ReRAM, although other technologies such as MRAM are also being explored.

For the first time in decades, silicon startups are shaping our future with new, innovative memory technologies. MRAM and these other memories are catalysts that will expand what modern technology and applications can do.

This article first appeared on Electronic Design – www.electronicdesign.com

About the author:

Jeff Lewis is Senior VP of Business Development at Spin Memory
