AImotive doubles performance in fourth-generation AI core

Hungarian AI technology developer AImotive has developed its next-generation neural network processing unit (NPU) design, doubling performance over the previous generation.

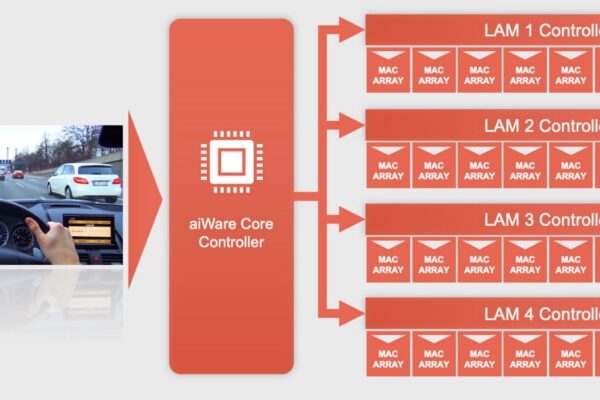

The fourth generation of the aiWare automotive NPU hardware IP delivers up to 64 TOPS per core at 2GHz, twice that of the previous design. Each core handles up to 16,384 INT8 multiply-accumulate (MAC) operations per clock cycle with 32-bit internal accuracy. It is aimed at chip designs built in 5nm and 3nm process technologies. The company has a key relationship with chip maker NextChip in South Korea.
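The headline figure can be sanity-checked from the per-cycle count and the clock frequency. The sketch below assumes that the 16,384 figure counts MAC operations per clock cycle and follows the common industry convention of counting each MAC as two operations (one multiply, one add); the function name is hypothetical.

```python
def peak_tops(macs_per_cycle: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak throughput in tera-operations per second (10**12 ops/s).

    Assumes every MAC unit completes one operation per cycle and that
    a MAC counts as two operations (multiply + add).
    """
    return macs_per_cycle * ops_per_mac * clock_hz / 1e12


# 16,384 MACs x 2 ops x 2 GHz
print(f"{peak_tops(16_384, 2e9):.1f} TOPS")
```

Under these assumptions the result is about 65.5 TOPS, close to the quoted "up to 64 TOPS" per core. Counting each MAC as a single operation would give only half of that, which is why the convention matters when comparing vendors' TOPS figures.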

Performance per watt has also been improved, and ISO 26262 ASIL-B safety support is standard. Configurable safety mechanisms up to ASIL-D let designers balance silicon overhead against functional safety requirements and objectives.

Each core scales up to 64 TOPS, and a multi-core cluster up to 256 TOPS, with greater configurability of on-chip memory, hardware safety mechanisms and external/shared memory support.
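The per-core and per-cluster figures imply a cluster of up to four 64-TOPS cores (256 / 64). As a rough sizing aid, the hypothetical helper below picks the number of cores needed for a target peak rate. It assumes ideal linear scaling across cores, which real multi-core designs rarely achieve, and treats 256 TOPS as a hard cluster limit.

```python
import math

CORE_PEAK_TOPS = 64       # per-core peak quoted for aiWare4
CLUSTER_PEAK_TOPS = 256   # per-cluster peak quoted for aiWare4


def cores_for(target_tops: float) -> int:
    """Hypothetical helper: cores needed to reach a target peak, assuming ideal linear scaling."""
    if target_tops <= 0:
        raise ValueError("target must be positive")
    cores = math.ceil(target_tops / CORE_PEAK_TOPS)
    max_cores = CLUSTER_PEAK_TOPS // CORE_PEAK_TOPS  # 4 cores per cluster
    if cores > max_cores:
        raise ValueError(f"{target_tops} TOPS exceeds a single {CLUSTER_PEAK_TOPS}-TOPS cluster")
    return cores


print(cores_for(100))  # a 100-TOPS target needs two cores
```

Requests above 256 TOPS raise an error here rather than silently spanning several clusters, since the article does not say how clusters are combined.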

Enhanced standard hardware features and related documentation make ISO 26262 compliance at ASIL-B and higher straightforward to achieve for both SEooC (Safety Element out of Context) and in-context safety element applications.

The core delivers 8-10 effective TOPS/W on typical CNN workloads, with a theoretical peak of up to 30 TOPS/W on a 5nm or smaller process node. AImotive says the core is up to 98 percent efficient across a wider range of CNN topologies, in particular the popular VGG16 and YOLO networks. This was demonstrated on aiWare3 with NextChip.
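Two back-of-envelope calculations connect these figures. If "98 percent efficient" is read as MAC-array utilization (an interpretation, not something the article defines), sustained throughput is peak throughput times utilization. Separately, since 1 TOPS/W equals 10^12 operations per joule, an efficiency figure converts directly into energy per inference. The 30-GOP workload below is a hypothetical placeholder, not a measured figure for any network.

```python
def effective_tops(peak_tops: float, utilization: float) -> float:
    """Sustained throughput when only a fraction of the MAC array does useful work."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    return peak_tops * utilization


def energy_per_inference_mj(ops_per_inference: float, tops_per_watt: float) -> float:
    """Energy per inference in millijoules.

    1 TOPS/W == 1e12 operations per joule == 1e9 operations per millijoule.
    """
    return ops_per_inference / (tops_per_watt * 1e9)


print(effective_tops(64, 0.98))              # sustained TOPS at 98% utilization
print(energy_per_inference_mj(30e9, 8))      # hypothetical 30-GOP network at 8 TOPS/W
print(energy_per_inference_mj(30e9, 30))     # same network at the 30 TOPS/W theoretical peak
```

The gap between the 8-10 effective TOPS/W and the 30 TOPS/W theoretical peak translates directly into a three- to four-fold difference in energy per inference for the same workload.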

This efficiency comes from MAC arrays optimized for 2D and 3D convolution and deconvolution without using matrix multipliers. The core also uses a new memory architecture called Wavefront RAM (WFRAM) with interleaved multi-tasking scheduling algorithms, a technique developed for GPU data processing that allows more parallel execution with improved multi-tasking capability. Compared with aiWare3, this substantially reduces memory bandwidth for CNNs that require significant access to external memory.
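To illustrate what computing convolution "without using matrix multipliers" can mean, the sketch below implements generic direct convolution: each output value is an explicit chain of MACs over a sliding window, rather than lowering the input with im2col and calling a matrix multiply. This is a textbook technique, not AImotive's hardware design. INT8 inputs with range-checked 32-bit sums loosely model the "INT8 operations with 32-bit internal accuracy" described earlier.

```python
INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def conv2d_direct(image, kernel):
    """Valid (no padding, stride 1) 2D convolution computed as explicit MACs.

    As in CNN frameworks, the kernel is not flipped (strictly, cross-correlation).
    Inputs and weights must be INT8; each finished sum is checked against
    the range of a 32-bit accumulator register.
    """
    for row in image + kernel:
        for v in row:
            if not INT8_MIN <= v <= INT8_MAX:
                raise ValueError(f"{v} is outside the INT8 range")
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for y in range(oh):
        out_row = []
        for x in range(ow):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[i][j]  # one multiply-accumulate
            if not INT32_MIN <= acc <= INT32_MAX:
                raise OverflowError("accumulator exceeded 32 bits")
            out_row.append(acc)
        out.append(out_row)
    return out


print(conv2d_direct([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, -1]]))
```

A hardware MAC array parallelizes the two inner loops (and usually the channel dimension) across many units. The data reuse between neighbouring windows is what a convolution-specific design can exploit without first expanding the input into a matrix.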

This combination enables aiWare4 to execute a wide range of CNN workloads using only on-chip SRAM, whether for single-chip edge AI or for more highly optimized ASIC or SoC applications.
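Whether a network can run from on-chip SRAM alone depends on its memory footprint. The article says on-chip memory is configurable but gives no sizes, so the sketch below takes the SRAM capacity as a hypothetical parameter. It applies a deliberately simple first-order model: all INT8 weights stay resident, and only one layer's input and output activations are live at a time. Real NPU compilers tile layers and stream data, so treat this as a rough check only.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical per-layer footprint in bytes (INT8: one byte per value)."""
    name: str
    weight_bytes: int
    input_bytes: int
    output_bytes: int


def fits_on_chip(layers: list[Layer], sram_bytes: int) -> bool:
    """Rough check: resident weights plus the largest live input+output activation pair."""
    weights = sum(layer.weight_bytes for layer in layers)
    peak_activations = max(layer.input_bytes + layer.output_bytes for layer in layers)
    return weights + peak_activations <= sram_bytes


# Hypothetical first layer: 3x3 conv, 3 -> 16 channels, on a 224x224 INT8 image (biases ignored).
first = Layer("conv1", weight_bytes=3 * 16 * 3 * 3,
              input_bytes=224 * 224 * 3, output_bytes=224 * 224 * 16)
print(fits_on_chip([first], sram_bytes=1 << 20))  # 1 MiB of hypothetical SRAM
```

Under this model, the largest activation pair, not the weights, dominates for early high-resolution layers. This is one reason memory-bandwidth and scheduling techniques matter for keeping workloads on chip.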

The design also includes more flexible power domains, enabling dynamic power management that can respond to real-time context changes without needing a restart.

“aiWare4 builds on the extensive experience we gained from working with our silicon and automotive partners, as well as insights from our aiDrive team into the latest trends and techniques driving the latest thinking in CNNs for automotive applications,” said Marton Feher, SVP hardware engineering for AImotive. “We offer the industry’s most efficient NPU for automotive inference and have now extended aiWare’s capabilities to achieve new levels of safety, flexibility, low-power operation and performance under the most demanding automotive operating environments.”

AImotive will be shipping aiWare4 RTL to lead customers starting Q3 2021.

If you enjoyed this article, you will like the following ones; don't miss them by subscribing to:

eeNews on Google News