Cerebras shows third-generation wafer-scale AI processor

This article is also available in French

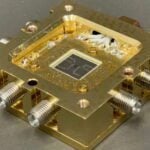

Cerebras has launched its third-generation wafer-scale AI accelerator, custom-built to train the most advanced AI models.

A deal with Qualcomm this week will see models trained on the Cerebras CS-3 accelerator run on Qualcomm inference engines.

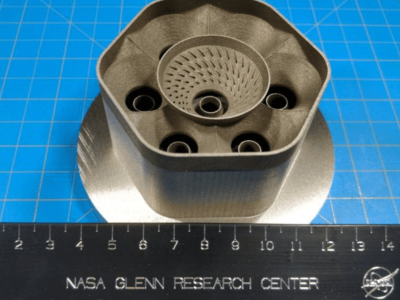

The CS-3 accelerator has over 4 trillion transistors in 900,000 cores – 57x more than the largest GPU – and is 2x faster than its predecessor. It sets records in training large language and multi-modal models.

- Cerebras delivers 4 exaFLOP AI supercomputer

- Cerebras announces second generation wafer-scale AI processor

The SwarmX interconnect allows up to 2048 CS-3 systems to be linked together to build hyperscale AI supercomputers of up to a quarter of a zettaflop (a zettaflop is 10^21 operations per second). The CS-3 can be configured with up to 1,200 terabytes of external memory, allowing a single system to train models of up to 24 trillion parameters, paving the way for ML researchers to build models 10x larger than GPT-4 and Claude.

The Cerebras CS-3 was designed to accelerate the latest large AI models. Each CS-3 core has 8-wide FP16 SIMD units, a 2x increase over the CS-2. The core also boosts performance for non-linear arithmetic operations and has more memory and bandwidth. The Condor Galaxy 3, built in collaboration with partner G42, will be the first CS-3 powered AI supercomputer and will be operational in Q2 2024 in Dallas, Texas.

In real-world testing using Llama 2, Falcon 40B, MPT-30B, and multi-modal models, Cerebras measured up to twice the tokens/second of the CS-2 without an increase in power or cost.

Large language models such as GPT-4 and Gemini are growing in size by 10x per year. Keeping up with ever-escalating compute and memory requirements calls for more scalable clusters.

While the CS-2 supported clusters of up to 192 systems, the CS-3 supports clusters of up to 2048 systems, an improvement of more than 10x. A full cluster of 2048 CS-3s delivers 256 exaflops of AI compute and can train Llama2-70B from scratch in less than a day. In comparison, Llama2-70B took approximately a month to train on Meta’s GPU cluster.
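The cluster figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only numbers from this article; the variable and function names are illustrative, and the per-system figure is derived from the quoted totals rather than taken from a Cerebras specification.

```python
# Back-of-the-envelope check of the cluster figures quoted in the article.
# All names are illustrative; the inputs are the article's own numbers.

PETA = 10**15
EXA = 10**18
ZETTA = 10**21

cs2_max_systems = 192           # maximum CS-2 cluster size
cs3_max_systems = 2048          # maximum CS-3 cluster size
cs3_cluster_flops = 256 * EXA   # quoted AI compute of a full CS-3 cluster


def per_system_flops(cluster_flops: int, systems: int) -> float:
    """Average compute per system implied by a cluster total."""
    return cluster_flops / systems


scale_ratio = cs3_max_systems / cs2_max_systems
implied_per_system = per_system_flops(cs3_cluster_flops, cs3_max_systems)
zettaflop_fraction = cs3_cluster_flops / ZETTA

print(f"Cluster size ratio: {scale_ratio:.2f}x")
print(f"Implied per-system compute: {implied_per_system / PETA:.0f} petaflops")
print(f"Fraction of a zettaflop: {zettaflop_fraction:.3f}")
```

On these numbers the cluster limit grows by a little under 11x, and the 256 exaflops total corresponds to roughly a quarter of a zettaflop.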

Unlike GPUs, Cerebras Wafer Scale Clusters decouple compute and memory components, so memory capacity can be scaled up easily in the MemoryX units. Cerebras CS-2 clusters supported 1.5TB and 12TB MemoryX units.

The CS-3 increases the MemoryX options to include 24TB and 36TB SKUs for enterprise customers, and 120TB and 1,200TB options for hyperscalers. The 1,200TB configuration is capable of storing models with 24 trillion parameters, paving the way for next-generation models an order of magnitude larger than GPT-4 and Gemini.

A single CS-3 can be paired with a single 1,200TB MemoryX unit, meaning a single CS-3 rack can store more model parameters than a 10,000-node GPU cluster. This lets a single ML engineer develop and debug trillion-parameter models on one machine.
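The memory figures imply a per-parameter storage budget, which the following sketch works out. It assumes decimal terabytes (the article does not say whether TB or TiB is meant), and applying the same ratio to the smaller MemoryX options is an extrapolation that Cerebras has not stated. All names are illustrative.

```python
# Storage budget per parameter implied by the article's MemoryX figures.
# Assumption: 1 TB = 10**12 bytes (the article does not specify TB vs TiB).

TB = 10**12
TRILLION = 10**12

largest_memoryx_bytes = 1_200 * TB   # largest MemoryX option
max_parameters = 24 * TRILLION       # model size quoted for that option

bytes_per_parameter = largest_memoryx_bytes / max_parameters


def implied_parameters(memory_tb: int) -> float:
    """Parameters that fit in memory_tb terabytes at the same ratio.

    Hypothetical extrapolation: the article only gives the ratio for the
    1,200 TB configuration.
    """
    return memory_tb * TB / bytes_per_parameter


print(f"Implied budget: {bytes_per_parameter:.0f} bytes per parameter")
for sku_tb in (24, 36, 120, 1_200):
    trillions = implied_parameters(sku_tb) / TRILLION
    print(f"{sku_tb:>5} TB -> about {trillions:.2f} trillion parameters")
```

The budget comes out at 50 bytes per parameter, far more than the two bytes an FP16 weight occupies, so the quoted capacity presumably covers additional training state; the article does not break this down.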

The Condor Galaxy 3 uses 64 CS-3 systems to deliver 8 exaflops of AI compute, and presents to the ML developer as a single processor with a single unified memory.
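As a consistency check, the Condor Galaxy 3 figures can be compared with those quoted for a full 2048-system cluster. This is a sketch using only the article's numbers; the names are illustrative and the per-system values are derived, not published specifications.

```python
# Compare per-system compute implied by Condor Galaxy 3 and by a full cluster.

PETA = 10**15
EXA = 10**18

cg3_systems = 64
cg3_flops = 8 * EXA             # Condor Galaxy 3 total

full_systems = 2048
full_flops = 256 * EXA          # full CS-3 cluster total

cg3_per_system = cg3_flops / cg3_systems
full_per_system = full_flops / full_systems
cg3_share_of_full = cg3_systems / full_systems

print(f"Condor Galaxy 3: {cg3_per_system / PETA:.0f} petaflops per system")
print(f"Full cluster:    {full_per_system / PETA:.0f} petaflops per system")
print(f"Condor Galaxy 3 is 1/{round(1 / cg3_share_of_full)} of the maximum cluster size")
```

Both totals imply the same per-system figure, so the quoted numbers assume compute scales linearly with the number of systems.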

Cerebras has partnered with Qualcomm to develop a joint AI platform for training and inference. Models trained on the CS-3 using architectural features such as unstructured sparsity can be accelerated on Qualcomm AI 100 Ultra inference accelerators. In aggregate, LLM inference throughput is up to 10x faster.
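For readers unfamiliar with the term, unstructured sparsity means individual weights are set to zero wherever they fall in a matrix, with no regular pattern. The sketch below illustrates the idea with magnitude pruning, a common generic technique; the article does not say how Cerebras or Qualcomm produce or exploit sparsity, and the function name and example weights are hypothetical.

```python
# Generic illustration of unstructured sparsity via magnitude pruning.
# This is NOT the Cerebras or Qualcomm method, which the article does not describe.

def prune_unstructured(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed.

    `weights` is a list of rows; `sparsity` is the fraction of entries to zero.
    Entries are chosen individually, so zeros can land anywhere in the matrix.
    """
    indexed = [
        (abs(value), row, col)
        for row, values in enumerate(weights)
        for col, value in enumerate(values)
    ]
    n_prune = int(len(indexed) * sparsity)
    pruned = [list(values) for values in weights]
    for _, row, col in sorted(indexed)[:n_prune]:
        pruned[row][col] = 0.0
    return pruned


weights = [
    [0.9, -0.05, 0.4],
    [0.01, -0.7, 0.2],
]
sparse = prune_unstructured(weights, sparsity=0.5)
zero_count = sum(value == 0.0 for row in sparse for value in row)

for row in sparse:
    print(row)
print(f"{zero_count} of 6 weights are zero")
```

Zeroed weights need not be stored or multiplied, which is where hardware that can skip them gains throughput.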

If you enjoyed this article, you will like the following ones: don't miss them by subscribing to :

eeNews on Google News